From Elon Musk to Stephen Hawking, several distinguished figures have openly voiced concerns about the rapid emergence of Artificial Intelligence.

In fact, Musk has even gone so far as to claim that there’s a “five to 10 percent chance of success [of making AI safe]”, and has advised companies working on AI development to slow down.

On the other hand, names like Mark Zuckerberg have been fairly positive about the overall progress of AI, hopeful that it’s going to eliminate poverty entirely, along with bringing other benefits.

Which group is right?

Honestly, the rather boring answer is “both”.

Yes, AI can be a great asset for all of humanity. Imagine all the things that we can’t do. AI could do those things far more quickly and efficiently, and it could solve problems that we never could. That idea alone is more than enough reason to support AI development.

However, amid all the optimism, we shouldn’t overlook the disadvantages. The first is the risk of this advanced technology ending up in the wrong hands, such as terrorists. Imagine the destruction it could cause. We’re already living in constant fear of terrorist attacks. If these bad actors get hold of such advanced technology, it could be a big setback for security.

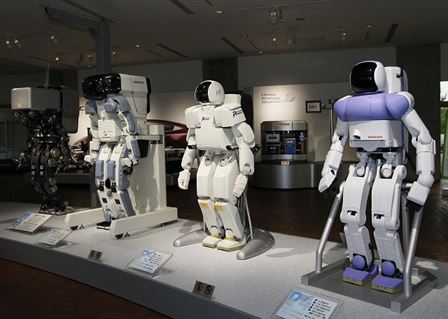

Now, coming to your exact question: yes, there is a possibility of AI-powered robots taking over the world. If we continue to develop AI thoughtlessly, with little caution and no plan B, we do live in danger. But if that ever happens, it’s likely to be a century from now. Whether the world will survive until then is a big question, given global warming and the lack of collective effort from developed nations against it.